Thanks for being part of another TestingUy

On September 4, the eleventh edition of TestingUy took place. More than 550 people attended the activities at Antel’s Telecommunications Tower, and over 1,100 people joined via streaming.

The agenda featured thirteen activities: two international-level keynotes, two panels on trending topics, three workshops, and six talks, delivered by 26 speakers. In addition, we offered live interview spaces to make the most of the full day.

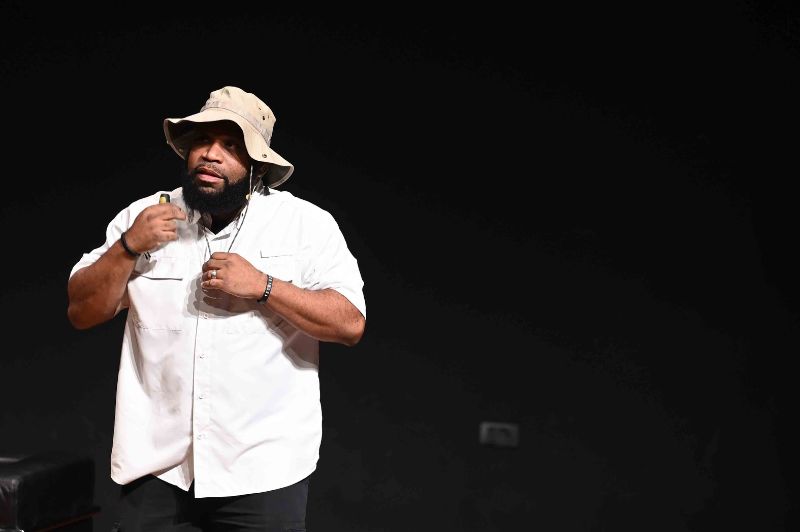

We were joined by Tariq King

TestingUy 2025 featured the participation of Tariq King, who delivered the opening keynote and a workshop.

Tariq King is the CEO of Test IO, a researcher, thought leader in artificial intelligence applied to software testing, and an influential voice in the international quality community.

It was truly inspiring to hear his vision and experience on this topic, his perspective on the Latin American market, especially the Uruguayan one, and to share conversations with him throughout the day’s activities.

Program

September 4 – Telecommunications Tower

CHECK-IN

WELCOME

Aníbal Banquero (Centro de Ensayos de Software), Guillermo Skrilec (QAlified), Gustavo Guimerans (Centro de Ensayos de Software), Yanaina López (QAlified)

KEYNOTE: Human Experience Testing: Redefining Quality in the Age of AI

Tariq King

Artificial Intelligence is rapidly becoming an accepted means of accelerating software engineering and testing activities. Many organizations have already integrated AI into their software development lifecycle, and the demand for AI-assisted engineering continues to grow year over year. A key motivating factor behind the demand is the promise of increased speed and efficiency through automation. Software testing is a prime candidate for AI acceleration due to the generally high costs and significant manual effort involved in validation and verification. As a result, we are seeing an exponential growth in the application and development of tools to support AI-driven test automation.

But what does the adoption of AI-driven automation mean for the future of software testing? Is AI going to replace manual testers and/or test automation engineers? It seems like these questions are quickly growing old and repetitive because no one is providing definitive answers based on practical, real-world analysis. Join industry expert Tariq King as he takes you on a journey into the future of software testing. Tariq firmly believes that AI will take over software testing and permanently transform the quality engineering landscape. He postulates that the future of testing will be focused on evaluating human experiences, including testing digital, physical, and experiential touchpoints. In the age of AI, human experience testing will not just be viewed as a quality assurance function, but as a core component of an organization’s business strategy. Welcome to the future of quality where AI specifies, designs, codes, and tests software, leaving the humans to do the one thing the machines can’t – test the human experience.

PANEL: Exploring the future of testing

Moderated by: Úrsula Bartram | Speakers: Cecilia Benassi, Guillermo Skrilec, Gustavo Guimerans

Software testing has come a long way: from manual approaches and rigid processes to agile methodologies, automation, and now the accelerating impact of artificial intelligence. But… where are we headed?

In this panel, three leading testing experts from the region will share their vision of the discipline’s evolution, its current state, and the challenges ahead. We’ll discuss how AI is redefining practices, roles, and skills, and explore what the community needs to do to adapt, grow, and continue delivering value in this new context.

COFFEE BREAK

TALK: How to test what we can’t predict? We’ll share our experience.

Gastón Marichal, Natalia Nario

Testing systems that integrate generative AI forced us to rethink many of the foundations and strategies of traditional testing. What once could be solved with a simple “pass or fail” now demands a deeper look: How accurate or correct is the response? Is it clear? Does it contain any bias?

In this talk, we’ll share how our journey at QAlified began: starting with a fully manual test process using Excel spreadsheets, analyzing responses one by one, and discovering on the fly what we really needed to do. We’ll walk you through how we gradually incorporated AI assistants to complement our analysis, evaluating key aspects such as formality, bias, completeness, and safety, using specific criteria for each. And finally, how this new way of testing pushed us to adapt to faster, more demanding cycles where automation became a key ally to achieving our goals.

A real story about how we transformed our testing process to meet the challenges posed by generative AI.

SPONSORED WORKSHOP: Automating Generative AI Testing: A Practical Workshop with Promptfoo

Abstracta | Renzo Parente, Laura Gayo, Vanessa Sastre

Large language models (LLMs) are making their way into real products, bringing the challenge of testing systems whose responses can change with each execution. This practical workshop focuses on using an open-source tool called Promptfoo to validate and test prompts and LLM-based functionalities. You’ll learn how to implement effective tests that adapt to the dynamic nature of LLM-generated responses, ensuring your AI applications perform reliably and optimally.

TALK: AI in Testing: Who Really Decides?

María Elisa Presto, Mariana Travieso

Artificial intelligence promises speed and accuracy: it generates tests, runs scripts, and delivers “flawless” reports. It seems like the hard work is already done. But is it really?

Behind this sense of security lies an invisible risk: accepting results without questioning them, letting autopilot decide for us, and losing our critical judgment. What happens when the automated system makes a mistake? What happens to our professional practice when we blindly trust AI?

In this talk, we’ll reflect—with concrete testing examples—on why we often behave as if what we see is all there is (WYSIATI) and explore practical tools to maintain our professional autonomy. An invitation to consider how to leverage AI without losing what’s essential: quality, responsibility, and our role as critical testers.

LUNCH - FREE TIME

TALK: QPAM: Empowering quality strategies at the team level

Claudia Badell

Quality is not achieved through a single action, nor is it solely the tester’s responsibility: it’s a sustained practice built collectively within the team. The QPAM (Quality Practices Assessment Model) provides a structured way to review, discuss, and improve how each team approaches quality in their day-to-day work.

In this talk, I’ll share how to use QPAM as a tool to strengthen testing and quality strategies from within the team, sparking honest conversations about what we do, what we don’t do, and why.

We’ll explore how QPAM helps teams become aware of the current state of their practices, foster collaboration, and drive real improvements, aligned both with the technical needs of the context and the business goals.

WORKSHOP: Prompt Engineering for Software Quality Professionals

Tariq King

With the sudden rise of ChatGPT and large language models (LLMs), professionals have been attempting to use these types of tools to improve productivity. Building off prior momentum in AI for testing, software quality professionals are leveraging LLMs for creating tests, generating test scripts, automatically analyzing test results, and more. However, if LLMs are not fed good prompts describing the task that the AI is supposed to perform, their responses can be inaccurate and unreliable, thereby diminishing productivity gains. Join Tariq King as he teaches you how to craft high-quality AI prompts and responsibly apply them to software testing and quality engineering tasks. After a brief walkthrough of how LLMs work, you’ll get hands-on with few-shot, role-based, and chain-of-thought prompting techniques. Learn how to adapt these techniques to your own use cases, while avoiding model “hallucinations” and adhering to your company’s security and compliance requirements.

TALK: Testing for everyone: Accessibility and a barrier-free digital experience

Natalia Barrios, Yannela Origoni

Digital accessibility is much more than a set of technical requirements: it’s a key dimension of user experience and a prerequisite for everyone to fully participate in digital environments. This talk offers a practical and understandable approach to integrating accessibility into testing.

Using the principles of universal design and the so-called “ramp effect,” we’ll explore how solutions designed for specific groups can end up benefiting all users. We’ll examine different modes of perception—visual, auditory, motor, and cognitive—and the most common barriers found in digital products. The session will also cover international standards (W3C’s WCAG) and current regulations in Uruguay, providing a clear framework for our work.

TALK: Pain-free Performance Testing: Common challenges and how to solve them without breaking the budget

Anisbert Suárez

Performance testing often seems costly, hard to plan, and frequently postponed due to lack of environments, tools, or time. But performance issues won’t wait.

In this talk, we’ll explore the typical challenges faced when trying to validate system performance with limited resources, and how to tackle them with a pragmatic approach, even testing in production in a controlled way. We’ll cover how to prioritize endpoints, use strategic mocking, avoid surprises with real data, and make informed decisions without major investments.

COFFEE BREAK

TALK: The B-Side of GenAI

Edgar Salazar

In this talk, we explore the often-overlooked aspects of Generative AI (GenAI): the security risks and potential threats. Beyond the promise of innovation, GenAI introduces a complex “B-side” that testing and security professionals need to understand. Through a practical walkthrough, we’ll break down the main vulnerabilities identified in LLMs, explain why model logic is so critical, and review the tactics and techniques attackers have used so far.

WORKSHOP: Automating your API with a Gen AI agent

Damián Pereira, Ayrton Solís, Juan Fagúndez, Manuel Buslón

Would you like to leave this workshop with a fully automated API and the method to replicate it in any project? In this hands-on session, we’ll put the **API Automation Agent**, an **open-source** tool that generates a complete API automation framework from OpenAPI specifications or Postman collections, to the test.

We’ll start with a presentation of the automation framework and review key concepts of API testing strategy. Then, each participant will clone the repository, run the agent against a sample API, and analyze the generated tests. From there, we’ll fix failing tests, add any missing ones, and set up a CI/CD pipeline to run them.

You’ll leave with a functional project, a step-by-step replicable guide, and the best practices we use daily to maintain healthy and scalable automation suites.

PANEL: Videogames testing

Moderated by: Gerson Da Silva | Speakers: Fabián Rodríguez, Gabriel Artus, Gonzalo Martínez

Four leading professionals from Uruguay’s videogame industry will share their experiences and perspectives on testing in this sector. Through their journeys, we will explore how quality assurance tasks are carried out in an environment that blends creativity, technological innovation, and international market demands.

The discussion will cover the unique challenges of testing video games from Uruguay, as well as the opportunities and needs currently facing the local industry. The goal is to provide a comprehensive view that highlights not only the technical and organizational difficulties but also the growth potential and specialization opportunities in this field within the country.

KEYNOTE: GenAI: Our responsibility in testing to not ignore the wave

Federico Toledo

Since the beginning of the disruption caused by generative artificial intelligence (GenAI), we have been asking ourselves how it will transform testing and Quality Engineering practices. In these first few years, some companies have chosen to innovate based on GenAI, taking significant risks. However, most organizations have focused their efforts on secondary products, internal optimizations, and low-risk areas.

The need to align technological evolution and GenAI adoption with quality practices is becoming increasingly evident. Doing so will allow us to deliver software quickly to remain competitive, while maintaining the quality our users deserve, avoiding damage to both company reputation and business outcomes.

In this talk, we will address some of the most relevant questions for the future of testing in a GenAI-driven environment:

- How real are the productivity gains promised by GenAI in software development?

- What testing practices should we adopt to ensure quality in a GenAI-based context?

- Which GenAI-powered tools have a tangible impact across the software development lifecycle?

- Why is it crucial to keep humans involved in the process (human in the loop)?

- In a world of copilots and low-code tools, which mindset adds more value: development or testing?

- How should we prepare, and how can we leverage the AI wave to position Latam as a leader in software quality?

Through these questions, we will explore how testing teams can lead innovation, ensure quality, and manage risks in a future where artificial intelligence plays a key role at every stage of software development.

SWEEPSTAKES AND CLOSING

Aníbal Banquero (Centro de Ensayos de Software), Yanaina López (QAlified)

Organized by

Ana Inés González Lamé

Diego Gawenda

Facundo de Battista

Gustavo Mažeikis

Lucía Rodríguez

Úrsula Bartram